An AI agent is software that can observe its environment, figure things out, make decisions, and act autonomously to reach a specified goal.

In this article, we'll shed light on the basic components of an AI agent and dig into how they work, without getting bogged down in technical jargon.

Let’s dive in.

Are all AI agents cognitive?

No.

AI agents can understand their surroundings, make choices, and act on their own to achieve goals. Their level of intelligence varies, but they all work without people constantly telling them what to do.

The parts that help them think, decide, and work together make them independent and intelligent.

However, the term "AI agent" is quite broad. It comprises a wide range of systems with varying levels of intelligence and autonomy.

While the field of AI is increasingly focused on developing more cognitive and intelligent agents, not all AI agents are cognitive.

Many practical and widely used AI systems operate effectively based on simpler, non-cognitive principles. The complexity and cognitive abilities of an AI agent are typically determined by the specific task it is designed to perform and the environment in which it operates.

Which brings us to a question: how are AI agents different from AI systems?

AI agents vs AI systems

To clarify the distinction between AI systems and AI agents, it's essential to understand their definitions, functionalities, and roles.

While both AI systems and AI agents are integral to the field of artificial intelligence, they serve distinct purposes.

Key components of an AI agent


These five essential components are what enable AI agents to take on complex tasks.

Let’s understand these key components through an example.

Imagine you want to automate end-to-end invoice processing with AI agents. We'll walk through each component and see how it contributes to the task.

Perception (Input handling)

Perception is how an agent gathers and processes data from its environment, that is, how it handles its inputs. This information can come from many places: what you type or say to a chatbot (a user query), APIs pulling in live data, and large databases.

An important thing to remember is that it's not just about scooping up all this raw data; this raw information needs to be cleaned up, organized, and turned into something the agent can actually use.

That's where technologies like natural language processing (NLP) and sentiment analysis come in. NLP helps the agent understand text (unstructured data), and sentiment analysis tells it whether a piece of text sounds happy or angry.

An AI agent's ability to perceive lets it use sensory data to understand its surroundings and spot patterns, such as recognizing objects or understanding what you mean when you type to a chatbot. This helps it make better decisions. For example, a smart home AI can detect someone entering a room and turn on the lights.

They use perception to interact with users too. For example, voice recognition lets virtual assistants understand and respond to spoken commands, improving user experience in areas like customer service and personal assistance.

The above examples point to the fact that there are different types of perception within AI agents.

Types of perception

- Visual: It's about interpreting visual data from cameras, enabling agents to recognize objects and understand spatial relationships.

- Auditory: This involves processing sound data, allowing agents to recognize speech and environmental sounds.

- Tactile: Here, the agents interpret touch data, which lets them sense physical contact and manipulate objects.

- Multimodal: AI agents integrate inputs from multiple sensory modalities to enhance understanding and decision-making. For example, combining visual and auditory data can improve object recognition in complex environments.

Our example - Your agent receives the PDF invoice via email. It parses the document using OCR and NLP. It detects fields: invoice number, vendor name, due date, line items, tax ID, totals. It cross-references invoice data with purchase orders and delivery receipts from internal systems.
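The field-detection step in this example can be sketched as rule-based extraction over text that an OCR engine has already produced. This is a minimal sketch under several assumptions. The label patterns (`Invoice Number:`, `Vendor:`, and so on) and the `extract_fields` helper are hypothetical, and they assume a single fixed invoice layout. Real agents usually rely on trained NLP or document-understanding models, because invoice layouts vary widely. To keep the sketch short, it leaves out line items, the tax ID, and cross-referencing with purchase orders.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical label patterns for ONE assumed invoice layout.
FIELD_PATTERNS = {
    "invoice_number": r"Invoice\s*(?:No\.?|Number)\s*[:#]?\s*(\S+)",
    "vendor_name": r"Vendor\s*:\s*(.+)",
    "due_date": r"Due\s*Date\s*:\s*(\d{4}-\d{2}-\d{2})",
    "po_number": r"\bPO\s*(?:No\.?|Number)?\s*[:#]?\s*(\S+)",
    "total": r"\bTotal\s*:\s*\$?([\d,]+\.\d{2})",
}

@dataclass
class InvoiceFields:
    invoice_number: Optional[str]
    vendor_name: Optional[str]
    due_date: Optional[str]
    po_number: Optional[str]
    total: Optional[float]

def extract_fields(ocr_text: str) -> InvoiceFields:
    """Turn raw OCR text into structured fields the agent can reason over."""
    found = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = re.search(pattern, ocr_text)
        found[name] = match.group(1).strip() if match else None
    # Normalize the total into a number; keep None if it was not found.
    total = float(found["total"].replace(",", "")) if found["total"] else None
    return InvoiceFields(found["invoice_number"], found["vendor_name"],
                         found["due_date"], found["po_number"], total)
```

Fields that are missing come back as `None` rather than raising an error. That lets the agent treat an incomplete invoice as something to reason about, not as a crash.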

So, now that the agent has taken all this information in, it has to remember things.

Memory (Contextual recall)

Memory is vital.

It gives the agent context and makes interactions feel personal. It’s sort of a key to contextual intelligence. You don't want every conversation to start from scratch. The agent needs to recall what happened before—retaining knowledge from past actions or interactions.

There are mainly two kinds of memory involved here.

- Short-term memory

- Long-term memory

Short-term memory is the agent's immediate working memory. It holds, for instance, what you just said in a conversation, and the agent uses that recent information to understand the current situation better.

For example, ChatGPT keeps the chat history within a session, which allows context-aware conversations. This in-session context improves short interactions, but on its own it does not carry information across sessions for continuous learning.
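One common way to implement short-term memory is a sliding window that keeps only the most recent turns of a conversation. The sketch below assumes that approach. The `ShortTermMemory` class and its four-turn window size are illustrative, not taken from any particular product.

```python
from collections import deque

class ShortTermMemory:
    """Keeps only the most recent conversation turns (a sliding window)."""

    def __init__(self, max_turns: int = 4):
        # A deque with maxlen drops the oldest turn automatically once full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_context(self) -> str:
        # Render the window as a prompt prefix for the next model call.
        return "\n".join(f"{role}: {text}" for role, text in self.turns)
```

Anything that falls out of the window is gone. That is exactly the gap long-term memory is meant to fill.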

In long-term memory, however, information is stored over longer periods and across different interactions. This helps the agent remember your preferences or questions you've asked before. It is usually implemented with a vector database combined with retrieval-augmented generation (RAG), where relevant memories are retrieved and added to the model's context.

A vector database is an efficient way to store and quickly get back this kind of long-term information. Think of it like a super-organized library where similar ideas are stored near each other.

It makes finding things fast. Also, these agents might prioritize new information and specifically log that in their long-term memory to recall later. It's a clever way for them to keep updating their knowledge base.
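The idea of "similar ideas stored near each other" can be sketched with a tiny in-memory store that ranks memories by cosine similarity. This is a toy under clear assumptions:

- The `embed` function here is a bag-of-words stand-in for a learned embedding model.
- `LongTermMemory` is a hypothetical class, not the API of any real vector database.

In a RAG setup, the recalled text would then be added to the model's prompt.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    # Toy stand-in for a learned embedding model: word-count vector.
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # missing keys in a Counter count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class LongTermMemory:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.records: list[tuple[Counter, str]] = []

    def remember(self, text: str) -> None:
        self.records.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Rank every stored memory by similarity to the query, best first.
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

A real vector database avoids comparing against every record by using approximate nearest-neighbour indexes. The ranking idea is the same.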

So, keeping the memory well structured and reliable over time is crucial. It helps the agent to work efficiently.

Our example - The agent recalls vendor payment history and PO metadata. It remembers the last time this vendor had an overbilling issue. The agent knows this vendor is part of a preferred supplier group (affects approval speed). It references internal rules: payment terms, approval thresholds, and previous exceptions.

Now, what does it do with all the memory it has? It starts reasoning.

Reasoning (Decision making)

Reasoning in AI agents is fundamental to their ability to make autonomous decisions, analyze complex situations, and optimize task execution. It enables the agents to process information in a way that mimics human cognitive functions.

Reasoning allows AI agents to analyze data and make decisions without human intervention. This capability is crucial for applications that require real-time responses, such as customer service automation or financial trading.

In fields like healthcare, reasoning can help AI agents suggest possible diagnoses by correlating symptoms with medical knowledge.

Reasoning can also help AI agents reduce errors, such as hallucinations (confident but incorrect outputs) and misinterpreted data. The agents can evaluate the quality of information before making decisions, leading to more reliable outcomes.

More advanced agents can look at different ways to solve a problem and then refine their approach as they learn more.

Types of reasoning in agents:

- Deductive: This involves drawing specific conclusions from general principles or facts. It is often used in expert systems where rules are applied to reach conclusions based on known information.

- Inductive: This type of reasoning allows agents to make generalizations based on specific observations. It is useful for pattern recognition and predictive analytics.

- Abductive: This reasoning method formulates the most likely explanations based on available evidence. It is particularly useful in diagnostic applications, such as identifying diseases from symptoms.

- Analogical: This involves drawing parallels between different situations to apply knowledge from one context to another. It helps agents leverage past experiences to inform current decisions.
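Deductive reasoning in rule-based expert systems is often implemented as forward chaining: apply if-then rules to known facts until nothing new can be concluded. The sketch below shows that technique. The fact and rule names (`preferred_vendor`, `fast_track`, and so on) are hypothetical.

```python
Rule = tuple[frozenset[str], str]  # (premises that must all hold, conclusion)

def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Deductive reasoning: apply if-then rules until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only when every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

rules = [
    (frozenset({"preferred_vendor", "under_limit"}), "fast_track"),
    (frozenset({"fast_track"}), "auto_approve"),
]
```

For example, `forward_chain({"preferred_vendor", "under_limit"}, rules)` concludes both `fast_track` and `auto_approve`. With only `preferred_vendor` known, no rule fires.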

Reasoning is what makes AI agents truly agentic. It lets them figure out how to reach their goals. By using language models, tools, and memory, reasoning allows agents to handle complicated situations effectively.

This makes them very useful for automation and improving complex processes.

Our example - The agent detects that this invoice references a PO that has already been invoiced and raises an internal duplicate flag. Based on company rules, it checks whether the invoice total exceeds approval limits. It also finds that delivery confirmation is missing, so it decides not to process the invoice yet.
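The checks in this example can be sketched as a small decision function that returns both a decision and the reasons behind it, which keeps the choice auditable. This is a sketch, not a real system's rules. The decision labels, the `InvoiceCheck` type, and the ordering of the checks are all assumptions. In particular, it treats exceeding the approval limit as a reason to escalate rather than hold.

```python
from dataclasses import dataclass

@dataclass
class InvoiceCheck:
    po_number: str
    total: float
    delivery_confirmed: bool

def decide(invoice: InvoiceCheck, invoiced_pos: set[str],
           approval_limit: float) -> tuple[str, list[str]]:
    """Apply company rules and explain the outcome (hypothetical rules)."""
    issues = []
    if invoice.po_number in invoiced_pos:
        issues.append("duplicate PO")
    if not invoice.delivery_confirmed:
        issues.append("missing delivery confirmation")
    if issues:
        # Blocking problems: do not process the invoice yet.
        return "hold_for_verification", issues
    if invoice.total > approval_limit:
        return "escalate_for_approval", ["exceeds approval limit"]
    return "route_for_approval", []
```

Returning the reasons alongside the decision gives the action step something concrete to put in its notification and audit log.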

Okay, so the agent has perceived, remembered, and reasoned. It has figured out what to do. It's time to take action.

Action component (Execution of tasks)

The action component is where the execution happens. Actions are the actual steps AI agents take to interact with their environment to achieve goals, allowing them to work autonomously.

The ability to use external tools, datasets, APIs, and other automated systems is what really expands an AI agent's capabilities beyond just its internal knowledge and reasoning.

An AI agent for customer service could access customer information, suggest relevant help articles, or escalate complex problems to a human agent.

It's like giving the agent access to a whole suite of external resources and abilities: getting information from databases, making changes to those databases, sending emails, and so on.

Tool calling

Tool calling is the ability of AI agents to interact with external tools, APIs, or systems to enhance their capabilities. This functionality is crucial for enabling agents to perform complex tasks beyond their native capabilities.

Here are the main aspects of tool calling:

- Integration with External Tools: AI agents can seamlessly integrate with various digital tools and services, such as Gmail, Slack, and Google Drive. This allows them to automate workflows, retrieve real-time data, and perform specialized tasks like scheduling or data analysis.

- Autonomous Decision-Making: The agent analyzes the context and decides on its own which tool to call and with which arguments. This reduces the need for manual oversight and makes task execution more efficient.

- Enhanced Functionality: Tool calling enables agents to fetch real-time information, execute functions, and perform multistep problem-solving. For example, an AI personal assistant might use tool calling to check the weather, manage calendar events, and send emails, all in one interaction.

- Security and Authentication: Many external tools require authentication for access. AI agents can use standard protocols such as OAuth, which give the agent a scoped access token instead of the user's password. This keeps credentials protected while the agent performs tasks on the user's behalf.
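On the agent's side, tool calling typically works like this: the model emits a structured request naming a tool and its arguments, and the agent runtime looks that tool up and executes it. This is a minimal sketch under assumptions. The JSON shape `{"name": ..., "arguments": {...}}` is an assumption (several LLM APIs use something similar). The `get_weather` and `add_event` tools are hypothetical stubs.

```python
import json
from typing import Any, Callable

# Hypothetical stub tools; real ones would wrap weather, calendar, or email APIs.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add_event(title: str, date: str) -> str:
    return f"Added '{title}' on {date}"

TOOLS: dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "add_event": add_event,
}

def run_tool_call(raw_call: str) -> str:
    """Execute a tool call the model emitted as JSON (assumed format)."""
    call = json.loads(raw_call)
    tool = TOOLS.get(call["name"])
    if tool is None:
        # Report unknown tools back instead of crashing, so the model can recover.
        return f"error: unknown tool {call['name']!r}"
    return str(tool(**call.get("arguments", {})))
```

The result string is usually fed back to the model, which can then decide whether to call another tool or answer the user.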

Communication

This component of an AI agent helps it to collaborate with humans or other AI agents. Communication is key for AI agents, especially when many work together. It lets them share information, decide smartly, and achieve common goals.

AI agents share data they gather to improve their understanding and make better decisions in real-time. For example, in logistics, an agent can inform others about stock levels to adjust delivery schedules.

AI agents work together smoothly through communication. In complex tasks, such as autonomous vehicle navigation or industrial automation, agents must work together, sharing insights and strategies to optimize performance.

Communication helps AI agents improve how they act by learning from shared information. This makes them more aware and better able to adapt when their surroundings change. For instance, in a smart city, traffic-management agents can adjust signal timing based on weather updates from other agents.

In multi-agent systems, each agent can focus on a specific part of a problem. When they communicate well, these specialized agents can share what they've learned, solving problems more quickly and effectively. For example, one agent could analyze data, while another carries out actions, and their communication ensures they work together smoothly.

AI agents use communication protocols to negotiate and resolve conflicts when their goals differ. This is especially useful when agents compete. Structured interaction protocols, such as negotiation or dialogue protocols, let them exchange proposals and reach agreements.

AI agents utilize various methods to communicate:

- Message Passing: Agents send and receive messages formatted as structured data (e.g., JSON, XML) to convey information. Depending on the application, this can be synchronous (the sender waits for a response) or asynchronous (the sender carries on without blocking, and any reply arrives later).

- APIs: APIs serve as standardized interfaces through which agents exchange data and functions, which eases integration across different systems and improves interoperability.

- Agent Communication Languages (ACLs): These formal languages provide structured frameworks for clear and unambiguous communication. Examples include FIPA-ACL and KQML, which help agents coordinate complex tasks effectively.

- Event-Driven Communication: Agents can respond to real-time events, adapting their actions based on changes in their environment. This dynamic communication is essential for applications requiring immediate responses.
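Asynchronous message passing can be sketched with two agents sharing an `asyncio.Queue`. This mirrors the logistics case above, where one agent reports stock levels and another adjusts deliveries. A few assumptions apply:

- The message fields loosely borrow FIPA-ACL names (`performative`, `sender`, `receiver`, `content`), but the JSON encoding is an illustrative choice, not the FIPA wire format.
- The agent functions are hypothetical.
- The low-stock threshold of 5 is arbitrary.

```python
import asyncio
import json

def make_message(performative: str, sender: str, receiver: str, content: dict) -> str:
    # FIPA-ACL-style field names, serialized as JSON for illustration only.
    return json.dumps({"performative": performative, "sender": sender,
                       "receiver": receiver, "content": content})

async def inventory_agent(outbox: asyncio.Queue) -> None:
    # Inform the scheduler about current stock for one item.
    await outbox.put(make_message("inform", "inventory", "scheduler",
                                  {"sku": "A-100", "stock": 3}))

async def scheduler_agent(inbox: asyncio.Queue) -> dict:
    msg = json.loads(await inbox.get())  # waits until a message arrives
    low = msg["content"]["stock"] < 5    # hypothetical low-stock threshold
    return {"sku": msg["content"]["sku"],
            "action": "delay_delivery" if low else "keep_schedule"}

async def main() -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    # Both agents run concurrently; the queue decouples sender from receiver.
    _, decision = await asyncio.gather(inventory_agent(queue), scheduler_agent(queue))
    return decision
```

Running `asyncio.run(main())` returns `{"sku": "A-100", "action": "delay_delivery"}`. The sender never waits for the receiver, which is what makes this exchange asynchronous.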

Our example - The agent records its decision, "Hold for verification," and notifies the procurement team. It updates the ERP system to mark the invoice status as "Pending – Verification Required" and sends a Slack message to the procurement lead. If the invoice is cleared later, the agent routes it for approval and schedules the payment in the AP system. It logs all actions in the audit trail and the internal knowledge base.

Now, a key part of being intelligent, obviously, is getting better over time by learning.

Learning (Continuous improvement)

Learning is how agents improve their responses over time using new data, much as humans do.

It is crucial for their ability to adapt, improve, and perform effectively in dynamic environments. Learning enables these agents to enhance their performance over time by acquiring knowledge from their experiences and interactions.

Learning allows AI agents to adapt to new situations and challenges. Unlike traditional AI systems that operate based on static rules, learning agents can modify their behavior based on feedback and new data.

AI agents utilize various learning methods to refine their decision-making processes and improve their performance. Key learning approaches include:

- Supervised: Agents learn from labeled datasets where the correct outputs are known. This method is often used for tasks like classification and regression, allowing agents to make accurate predictions based on past examples.

- Unsupervised: In this approach, agents analyze unlabeled data to identify patterns or groupings. This is useful for applications like customer segmentation or anomaly detection, where the agent needs to discover insights without explicit guidance.

- Reinforcement: Agents learn through trial and error by interacting with their environment and receiving feedback in the form of rewards or penalties. This method is particularly effective for complex tasks, such as game playing or robotic navigation, where agents must develop strategies over time.
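Reinforcement learning's trial-and-error loop can be illustrated with its simplest setting, a multi-armed bandit with epsilon-greedy exploration. A bandit is a stateless simplification of full reinforcement learning, chosen here only to keep the sketch short. The agent does not know the success rates `[0.2, 0.5, 0.8]`; those hidden rates are made-up values that play the part of the environment.

```python
import random

def epsilon_greedy(true_rates: list[float], steps: int = 2000,
                   epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Learn which action pays off best purely from reward feedback."""
    rng = random.Random(seed)
    n_actions = len(true_rates)
    estimates = [0.0] * n_actions  # running estimate of each action's reward
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # Environment: reward 1 with the action's hidden success rate, else 0.
        reward = 1.0 if rng.random() < true_rates[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates
```

After enough steps, `epsilon_greedy([0.2, 0.5, 0.8])` should rank the third action highest. The agent is never told the true rates; the rewards alone teach it.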

Our example - The agent logs this invoice pattern as a common exception. It fine-tunes its decision rules to flag similar vendor behavior earlier, and it updates its duplicate-invoice detection based on feedback from the team. The next time, it autonomously pre-checks for a duplicate PO before engaging finance.
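The feedback loop in this example can be sketched in a deliberately simple form. Instead of retraining a model, the agent counts the duplicates the team has confirmed for each vendor. Once that count reaches a threshold, it pre-checks that vendor's invoices. The `ExceptionLearner` class and the threshold of 2 are hypothetical; a production agent might instead update a trained classifier with the labeled feedback.

```python
from collections import defaultdict

class ExceptionLearner:
    """Learns from reviewer feedback which vendors need a duplicate-PO pre-check."""

    def __init__(self, min_confirmed: int = 2):
        self.min_confirmed = min_confirmed  # hypothetical threshold
        self.confirmed = defaultdict(int)   # vendor -> confirmed duplicates

    def record_feedback(self, vendor: str, was_duplicate: bool) -> None:
        # Only exceptions the finance team confirmed count as evidence.
        if was_duplicate:
            self.confirmed[vendor] += 1

    def needs_precheck(self, vendor: str) -> bool:
        return self.confirmed.get(vendor, 0) >= self.min_confirmed
```

Behavior changes only after confirmed feedback accumulates. This keeps a single false alarm from permanently slowing down a vendor's invoices.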

Now that the core components of an AI agent are clear, the next step is to understand how they work together.

How do these five core components work together?

To understand how the components of an AI agent work together, it's important to look at the agent's architecture and at how the components interact, because these two elements are what enable the agent to function autonomously.


AI agents use perception to understand their surroundings, memory to retain context, reasoning to make decisions, action to interact with their environment, and learning to improve.

These parts work together so the agents can act on their own, handle complex tasks, get better over time, and be useful in many areas, like customer service and self-driving cars.
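The loop that ties these parts together can be sketched as a toy agent with one method per component. It is illustrative only. The `ToyAgent` class, its alert-counting rule, and the threshold-lowering feedback are hypothetical. Real agents would put a language model, tools, and a memory store behind these same steps.

```python
from dataclasses import dataclass, field

@dataclass
class ToyAgent:
    """Perceive -> remember -> reason -> act, then learn from feedback."""
    threshold: int = 3  # hypothetical: repeated alerts needed before escalating
    memory: list[str] = field(default_factory=list)

    def perceive(self, raw: str) -> str:
        return raw.strip().lower()  # turn raw input into a clean observation

    def reason(self) -> str:
        alerts = sum("alert" in m for m in self.memory)  # consult memory
        return "escalate" if alerts >= self.threshold else "log"

    def act(self, decision: str) -> str:
        return f"{decision}: {self.memory[-1]}"  # stand-in for a real tool call

    def step(self, raw: str) -> str:
        self.memory.append(self.perceive(raw))  # remember the observation
        return self.act(self.reason())

    def learn(self, escalated_too_late: bool) -> None:
        # Feedback changes future behavior: escalate sooner if we were too slow.
        if escalated_too_late and self.threshold > 1:
            self.threshold -= 1
```

Feeding the same alert three times produces two `log` actions and then an `escalate`. After `learn(True)`, the agent escalates one step earlier on its next run.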

Understanding how these components interact is key to building effective AI solutions for businesses.

Let’s summarize

Imagine systems that don't just follow instructions but anticipate needs, solve novel problems, and proactively drive outcomes. This shift frees your teams to focus on strategy, creative problem-solving, and direct customer engagement rather than getting bogged down in repetitive or complex operational tasks.

That's why AI agents are a powerful and transformative technology that is already having a major impact across industries. A solid understanding of their fundamental components is key to grasping their full potential.

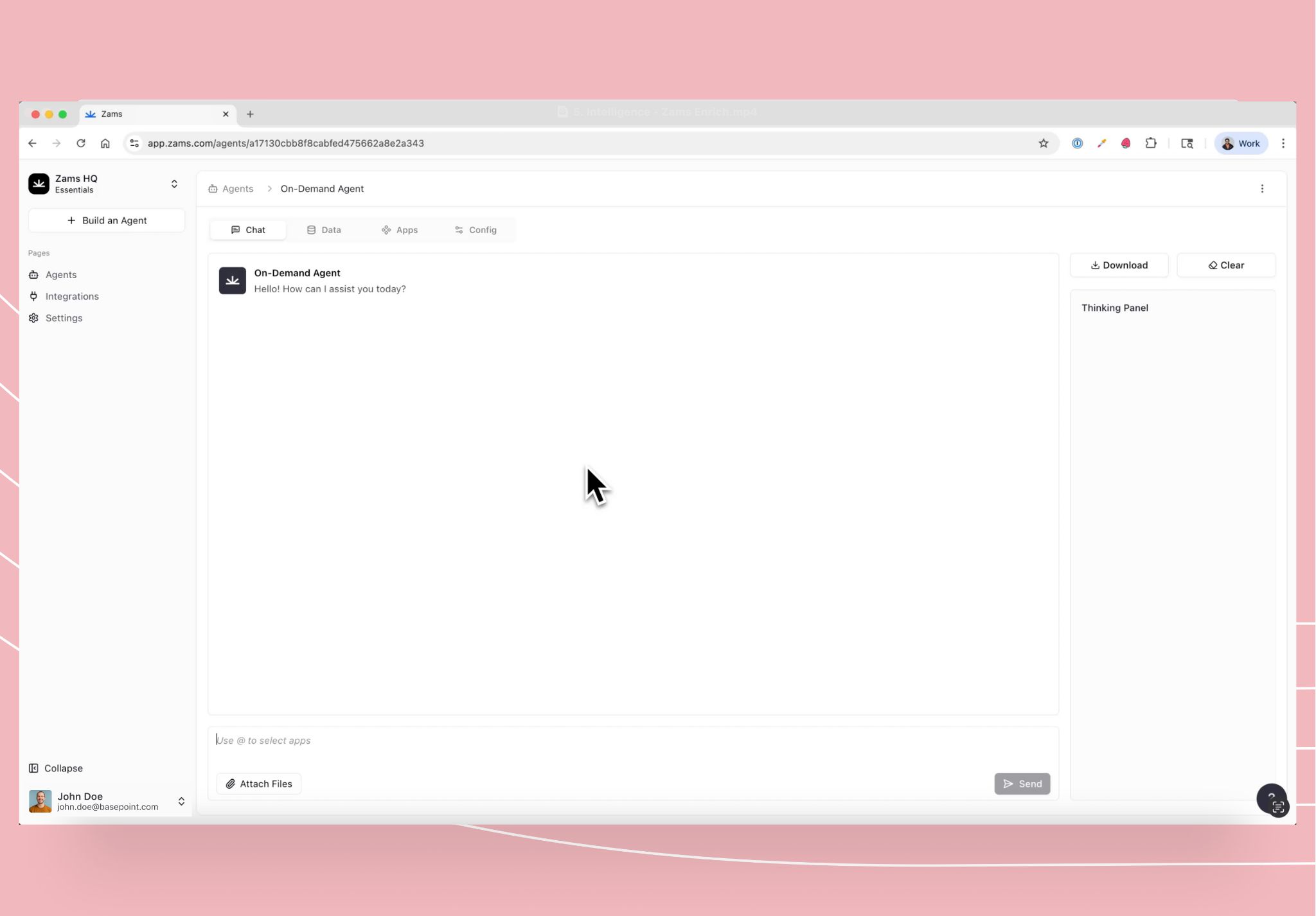

Try it with Zams

Zams streamlines the rollout of enterprise-grade multi-agent automation, letting teams bypass the technical burdens of infrastructure management or model calibration. Simply specify your targets and context. Zams handles the orchestration, connecting systems, maintaining memory, managing errors, and tracking workflow versions automatically.