Something we get asked about a lot is the difference between training data and testing data. It’s so important to not only know the difference, but ensure you’re using both the right way.

In this article, we’ll compare training data vs. test data and explain the place for each in machine learning models, why data preparation matters, and how to balance accuracy with speed.

What is Machine Learning Training Data?

Machine learning uses algorithms to learn from structured or unstructured datasets. They find patterns, develop understanding, make decisions, and evaluate those decisions.

In machine learning, datasets are split into two subsets.

The first subset is known as the training dataset - it’s a portion of our actual dataset that is fed into the machine learning model to discover and learn patterns. In this way, it trains our model.

The other subset is known as the testing data. We’ll cover more on this below.

Training data is typically larger than testing data. The reason is simple: the more examples a model sees, the better it becomes at recognizing meaningful relationships between input features and outcomes.

Once data from the dataset is fed to the algorithm, the system learns and makes decisions based on that information. Algorithms enable machines to solve problems from past observations, just like humans, but at scale and with far more examples.

As models are exposed to more machine learning training data, they improve over time. They reduce bias, adapt to variability, and start recognizing edge cases that improve predictive accuracy.

Your training data will vary depending on whether you’re using supervised learning (with labeled outcomes) or unsupervised learning (where patterns are found without labels). We cover the differences between these two in our blog post on machine learning vs. artificial intelligence.

To summarize: Your training data is a subset of your dataset that you use to teach a machine learning model to recognize patterns or perform your criteria.

Keep Your Machine Learning Data Clean and Ready for Action

Machine learning models are only as good as the data behind them. Zams automates CRM updates, syncs datasets across systems, and keeps every record accurate, so your team builds on data you can trust.

What is Testing Data?

Once your machine learning model is built (with your training data), you need unseen data to test your model. This data is called testing data, and you can use it to evaluate the performance and progress of your algorithms’ training and adjust or optimize it for improved results.

Testing data has two main criteria. It should:

- Represent the actual dataset

- Be large enough to generate meaningful predictions

Because the model has already “seen” and learned from the training data, the testing data must be completely new and unseen. That’s the only way to know if your algorithm can generalize correctly to fresh, real-world data.

In practice, data scientists often split their datasets 80% for training and 20% for testing.

Note: In supervised learning, the outcomes are removed from the actual dataset when creating the testing dataset. They are then fed into the trained model. The outcomes predicted by the trained model are compared with the actual outcomes. Depending on how the model performs on the testing dataset, we can evaluate the performance of the model.

Why Knowing the Difference is Important

The difference between training and testing data is simple:

- One teaches your model how to make predictions.

- The other validates if those predictions are correct.

But confusion happens when teams mix or reuse data incorrectly. That’s when overfitting appears, when a model memorizes training data instead of learning patterns that generalize.

Knowing the difference prevents wasted time, inaccurate insights, and poor business decisions. High-quality machine learning training data ensures your model learns effectively; well-designed test data ensures it performs reliably in production.

At Zams, we see this principle in action every day. Clean, structured data is the engine behind better automation, sharper forecasting, and more predictable revenue.

Now that we’ve covered the differences between the two, let’s dive deeper into how training and testing data work.

How Training and Testing Data Work

Machine learning models are built off of algorithms that analyze your training dataset, classify the inputs and outputs, then analyze it again.

Trained enough, an algorithm will essentially memorize all of the inputs and outputs in a training dataset, this becomes a problem when it needs to consider data from other sources, such as real-world customers.

The training data process is comprised of three steps:

- Feed - Feeding a model with data

- Define - The model transforms training data into text vectors (numbers that represent data features)

- Test - Finally, you test your model by feeding it test data (unseen data).

When training is complete, then you’re good to use the 20% of data you saved from your actual dataset (without labeled outcomes, if leveraging supervised learning) to test the model. This is where the model is fine-tuned to make sure it works the way we want it to.

In Obviously AI, the entire process (training and testing) is conducted in a matter of seconds, so you don’t have to worry about fine-tuning. However, we always say that it’s always good to know what’s happening behind the scenes so it’s not a black box.

How Much Training Data do You Need

We get asked this question a lot, and the answer is: It depends.

We don't mean to be vague, this is the kind of answer you'll get from most data scientists. That's because the amount of data required depends on a few factors, such as:

- The complexity of the problem

- The structure and type of the learning algorithm

- The quality and balance of your training data set

In Obviously AI, we always say: the more data, the better. That's because the more you train your model, the smarter it will become. But so long as your data is well prepared, follows a basic data prep checklist, and is ready for machine learning, then you'll still achieve accurate results. And, with our platform, those accurate results are generated in seconds.

High-quality machine learning data leads to faster learning curves, fewer errors, and more stable predictions. With the right balance of training and test data, models adapt more efficiently and require fewer retraining cycles over time.

Validation Data: The Often-Overlooked Middle Layer

Between training and testing sits validation data, a smaller subset used during model tuning. It helps data scientists tweak hyperparameters and optimize performance without overfitting.

Validation datasets act as a safety net. They let you evaluate performance before exposing the model to final test data. This ensures accuracy, repeatability, and confidence in production results.

Including validation data strengthens your data split strategy, and Google’s algorithms now reward content that captures this kind of contextual completeness.

Common Pitfalls in Using Training and Test Data

Even experienced teams can run into issues when preparing datasets. Here are the most common mistakes:

- Data leakage: When test data accidentally influences the training process.

- Unbalanced datasets: When some classes or outcomes dominate the training data.

- Improper data splitting: Using random or inconsistent ratios that distort results.

- Ignoring feature relevance: Feeding irrelevant or redundant variables that confuse the model.

Avoiding these pitfalls helps models stay adaptable and ensures consistent model performance across changing real-world data.

Real-World Example: Predicting Customer Churn

Let’s apply this concept to a real scenario.Imagine you’re building a model to predict customer churn. The training dataset includes thousands of past customer profiles with behavior patterns and outcomes (churned or retained).The test dataset contains a fresh batch of customer data from the most recent quarter, data the model hasn’t seen.If the model performs well on that unseen dataset, you’ve trained it correctly. If not, you may need to revisit your feature selection, expand your training data set, or balance your data distribution.

Why Zams?

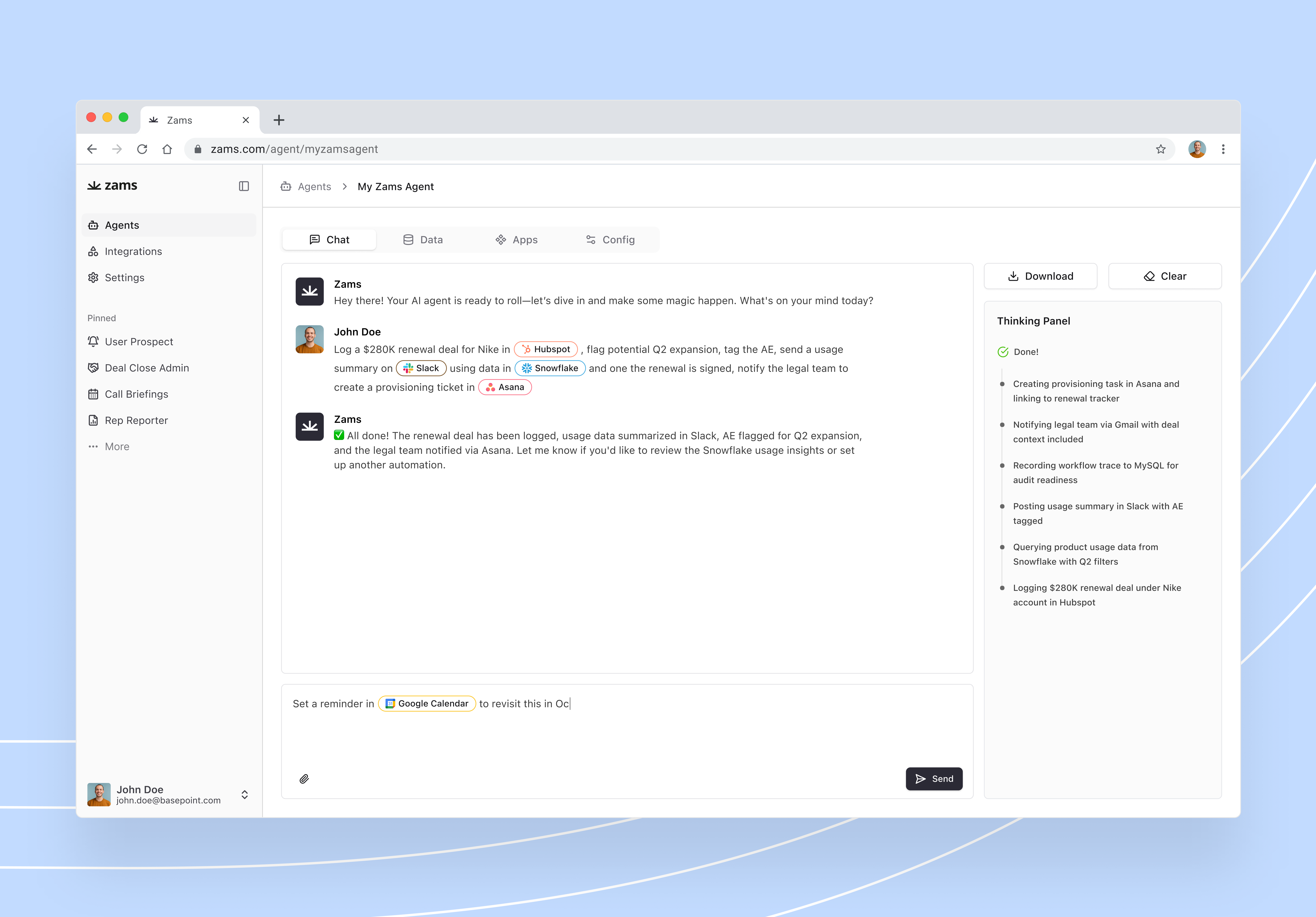

Zams is not just another tool, it’s your AI Command Center for clean data and automated workflows across your stack. It plugs into 100+ tools (Salesforce, HubSpot, Slack, Gong, etc.), automates CRM updates, pipeline tasks, and follow-ups, and keeps data synced, accurate, and action-ready.

With Zams, you don’t build complex workflows or maintain connectors. You issue commands in plain language, and Zams handles the behind-the-scenes orchestration via web services, API requests, integration logic, and data routing.

In the context of training vs. test data, Zams ensures your data pipelines stay clean and synchronized across systems, so your machine learning training and test datasets stay aligned with real operational data. That means up to 80% fewer sync errors, less drift, and more confidence in your models’ performance.

Summary

Good machine learning training data is the backbone of machine learning. Understanding the importance of training datasets in machine learning ensures you have the right quality and quantity of training data for training your model.

Now that you understand the difference between training data and test data and why it’s important, you can put your own dataset to work.

Want more? Check out our blog post on the importance of having clean data for machine learning.

If you’re looking for a deep dive on all things AI and machine learning, be sure to check out our Ultimate Guide to Machine Learning.

Messy data slows revenue down. Zams keeps your CRM and data ecosystem clean, connected, and ready for real-time automation - giving your teams the leverage to move faster and scale smarter.

FAQ's

What is the difference between training data and test data in simple terms?

Training data teaches your machine learning model how to recognize patterns and relationships between inputs and outcomes. Test data checks whether those lessons hold true with new, unseen data. Together, they make sure your model learns accurately and performs reliably in real-world scenarios, not just in theory.

Why do we split data into training and test sets?

Splitting data helps prevent overfitting, the most common issue in machine learning. When models train and test on the same data, they memorize rather than learn. By separating the two sets, you can measure true model accuracy and ensure your predictions remain reliable when new data enters the system.

How does validation data improve model performance?

Validation data sits between training and test data. It’s used to fine-tune model parameters and find the sweet spot between underfitting and overfitting. This step ensures that when your model faces test data, it performs consistently and efficiently, something automation platforms like us at Zams value deeply: clean processes, optimized outcomes, and zero guesswork.

How can automation help manage training and test data?

Automation simplifies the repetitive parts of data preparation, cleaning, labeling, and splitting datasets, so teams can focus on strategy and insights. With connected systems, automation ensures your machine learning data stays synchronized across platforms. Zams applies the same principle to CRM and RevOps workflows, removing manual steps so data stays actionable in real time.

What are the characteristics of a good training dataset?

A strong machine learning training dataset is accurate, complete, and representative of real-world diversity. It should include enough examples to reflect true patterns without bias. Quality data ensures algorithms learn correctly, improving prediction accuracy and model reliability, both essential for scalable, high-performing AI systems.e, diverse, representative of real-world cases, and free from duplicate or missing values.

How can data quality impact model performance?

Poor data quality creates blind spots. Missing values, duplicates, or inconsistent formats confuse algorithms, leading to false insights. Clean, standardized data, like the kind maintained through automated systems, keeps models sharp and decisions precise. It’s the foundation of trustworthy AI and efficient operations.

Can training and test data come from different sources?

Yes, as long as both represent the same problem domain. For instance, you can train on customer data from one region and test on another, if patterns are comparable. What matters is balance and alignment. If sources differ too much, accuracy drops. Automation can help maintain consistency across data sources by flagging mismatched structures early.

How do you measure if your model performs well on test data?

Model performance is measured with metrics like accuracy, precision, recall, and F1 score. Each shows how close predictions are to reality. The key is evaluating performance across multiple metrics, not just one, so you capture both correctness and consistency. Automated dashboards can simplify this by generating these metrics in real time.

What happens if you reuse training data for testing?

Reusing training data for testing creates an illusion of success. The model performs well because it’s recognizing data it already knows, not learning new patterns. This leads to overfitting and unreliable performance in production. Always test with unseen data to get a true measure of predictive power.

How does Zams use machine learning training data?

Zams integrates machine learning training data into a connected automation framework. Instead of retraining models manually or relying on inconsistent inputs, Zams keeps your CRM and business systems synchronized - cutting sync errors by up to 80%. The result: faster workflows, higher accuracy, and a data foundation ready for any AI application.